CubeWerx Inc. |

Publication Date: 2022-05-30 |

External identifier of this CubeWerx® document: https://cubewerx.pvretano.com/Projects/CSA/doc/csa.html |

Internal reference number of this CubeWerx® document: 22-001 |

Version: 1.0 |

Category: CubeWerx® Design Document |

Editor: Panagiotis (Peter) A. Vretanos |

CubeWerx TEP Marketplace Design Document |

Copyright notice |

Copyright © 2022 CubeWerx Inc. |

To obtain additional rights of use, visit http://www.cubewerx.com/legal/ |

Warning |

Document type: CubeWerx® Inc. <Design Document> |

Document language: English |

CubeWerx proprietary and confidential disclaimer

The information contained in this document is intended only for the person or entity to which it is addressed. This document contains confidential and intellectual property of CubeWerx. Any review, retransmission, dissimination or other use of, or taking of any action in reliance upon this information by persons or entities other then the intended recipient is strictly prohibited without the express consent of CubeWerx. If you receive any content of this document by error, please contact CubeWerx and delete the material from all your computers.

- 1. Scope

- 2. References

- 3. Terms and Definitions

- 4. Conventions

- 5. Scenarios

- 6. Requirements summary

- 6.1. Overview

- 6.2. Web front end

- 6.3. Authentication & Access Control

- 6.4. User Workspace Management

- 6.5. Job manager

- 6.6. License manager

- 6.7. Notification and analytics

- 6.8. Execution and Management service

- 6.9. Catalogue

- 6.10. Application execution (ADES)

- 6.11. Cost estimation and billing (ADES)

- 6.12. Parallel execution module (ADES)

- 6.13. Results manager (ADES)

- 6.14. Storage layer

- 6.15. Federation

- 6.16. Administration

- 7. System Architecture Overview

- 7.1. Web front end

- 7.2. Execution Management Service (EMS)

- 7.3. Authentication server

- 7.4. User workspace manager

- 7.5. Job manager

- 7.6. License manager

- 7.7. The Notification & Analytics module

- 7.8. Application Deployment and Execution Service (ADES)

- 7.9. Catalogue service

- 7.10. Docker registry

- 7.11. Elastic Kubernetes Service (EKS) Container Execution Service

- 7.12. Storage layer

- 8. Security and Access Control

- 9. Workspace manager

- 10. Catalogue service

- 11. Jobs manager

- 12. License manager

- 13. Notification module

- 13.1. Event types

- 13.1.1. The user has reached 75% of their workspace size quota

- 13.1.2. The user has reached 90% of their workspace size quota

- 13.1.3. The user has exceeded their workspace size quota

- 13.1.4. A job has completed and processing results are ready

- 13.1.5. A job (i.e., process execution) has failed

- 13.1.6. An application/process has been added to the TEP Marketplace

- 13.1.7. An application/process has been updated in the TEP Marketplace

- 13.1.8. An application/process has been removed from the TEP Marketplace

- 13.1.9. A data set has been added as a TEP-holding

- 13.1.10. A TEP-holding data set has been updated

- 13.1.11. A data set has been removed as a TEP-holding

- 13.1.12. A new TEP-holdings web service is available

- 13.1.13. The data set served by a TEP-holdings web service has been updated

- 13.1.14. A TEP-holdings web service is no longer available

- 13.1.15. Another TEP user has shared a new web service with the user

- 13.1.16. The data set served by a shared web service has been updated

- 13.1.17. Another TEP user has stopped sharing a web service with the user

- 13.2. Notification methods

- 13.3. Database tables

- 13.4. Event Occurrences

- 13.5. Notification Daemon

- 13.1. Event types

- 14. Atom feed

- 15. Analytics module

- 16. Workflows and chaining

- 17. The OGC Application Package

- 18. Execution Management Service (EMS)

- 19. Application Deployment and Execution Service (ADES)

- 20. Quotation and billing

- 21. TEP Marketplace Web Client

- 22. Storage layer

- Annex A: Revision History

- Annex B: Bibliography

i. Abstract

The traditional approach for exploiting data is:

-

User develops one or more applications to process data.

-

The data of interest is copied to the user’s environment.

-

The data is processes in the user’s environment.

-

If the data is too voluminous, the data is segmented into smaller chuncks each of which is copied to the user’s environment for processing.

This results in the data being transferred many times and replicated in many places which in-turn introduces issues of scalability and currency of the data.

The exploitation platform (EP) concept endeavours to turn this traditional approach on its head. The fundamental principal of the EP concept is to move the user’s application to the data.

As opposed to downloading, replicating, and exploiting data in their local environment, in the EP approach users access a platform. The platform provides a collaborative, virtual work environment that offers:

-

Relevant data; in many cases the data is centred around a theme (e.g. forestry, hydrology, geohazards, etc.) and so the term "thematic exploitation platform" is introduced.

-

An application repository or "app store" (Software as a Service, SaaS) providing access to relevant advanced processing applications (e.g. SAR processors, imagery toolboxes)

-

The ability for users to upload their own applications (and data) to the platform.

-

Scalable network, computing resources and hosted processing (Infrastructure as a Service - IaaS)

-

A platform environment (Platform as a Service - PaaS), that allows users to:

-

to discover the resources offered by the platform

-

integrate, test, run, and manage applications without the complexity of building and maintaining their own infrastructure

-

provides access to standard platform services and functions such as:

-

collaborative tools

-

data mining and visualization applications

-

relevant development tools (such as Python, IDL etc.)

-

communication tools (e.g. social network)

-

documentation

-

-

accounting and reporting tools to manage resource utilization.

-

The CubeWerx TEP, the design of which is described in this document, is implemented according to the following principles:

-

Implement standards – to ensure interoperability

-

Implement infrastructure independence – to ensure cost effective infrastructure sourcing, avoid vendor lock-in, and allow reuse of public and commercially available information and communications technology

-

Implement pay-per-use – to avoid capital investment, contain costs, and allow for cost-sharing

ii. Keywords

The following are keywords to be used by search engines and document catalogues.

ADES, application, exploitation, EMS, marketplace, OGC API, platform, thematic, TEP,

iii. Preface

Attention is drawn to the possibility that some of the elements of this document may be the subject of patent rights. CubeWerx Inc. shall not be held responsible for identifying any or all such patent rights.

Recipients of this document are requested to submit, with their comments, notification of any relevant patent claims or other intellectual property rights of which they may be aware that might be infringed by any implementation of the system design set forth in this document, and to provide supporting documentation.

iv. Security Considerations

A Web API is a powerful tool for sharing information and the analysis of resources. It also provides many avenues for unscrupulous users to attack those resources. A valuable resource is the Common Weakness Enumeration (CWE) registry at http://cwe.mitre.org/data/index.html. The CWE is organized around three views; Research, Architectural, and Development.

-

Research: facilitates research into weaknesses and can be leveraged to systematically identify theoretical gaps within CWE.

-

Architectural: organizes weaknesses according to common architectural security tactics. It is intended to assist architects in identifying potential mistakes that can be made when designing software.

-

Development: organizes weaknesses around concepts that are frequently used or encountered in software development.

CubeWerx Inc. has primarily focused on the Architectural and Development views as those most directly relate to the design and implementation of the TEP Marketplace.

Development view vulnerabilities primarily deal with the details of software design and implementation.

Architectural view vulnerabilities primarily deal with the design of an API. However, there are critical vulnerabilities described in the Development view which are also relevant to API design. Vulnerabilities described under the following categories are particularly important:

-

Pathname Traversal and Equivalence Errors,

-

Channel and Path Errors, and

-

Web Problems.

Many of the vulnerabilities described in the CWE are introduced through the HTTP protocol which addresses many of these vulnerabilities in the "Security Considerations" sections of the IETF RFCs 7230 to 7235.

The following sections describe some of the most serious vulnerabilities which CubeWerx has endeavoured to mitigate. These are high-level generalizations of the more detailed vulnerabilities described in the CWE.

Multiple Access Routes

The TEP Marketplace APIs deliver a representation of a resource. The APIs can deliver multiple representations (formats) of the same resource. An attacker may find that information which is prohibited in one representation can be accessed through another. CubeWerx has taken care that the access controls on resources are implemented consistently across all representations. That does not mean that they have to be the same. For example, consider the following.

HTML vs. GeoTIFF – The HTML representation may consist of a text description of the resource accompanied by a thumbnail image. This has less information than the GeoTIFF representation and may be subject to more liberal access policies.

Data Centric Security – techniques to embed access controls into the representation itself. A GeoTIFF with Data Centric Security would have more liberal access policies than a GeoTIFF without.

Bottom Line: CubeWerx has taken care to ensure that the information content of the resources exposed by the TEP Marketplace API are protected to the same level across all access routes.

Multiple Servers

The implementation of an API may span a number of servers. Each server is an entry point into the API. Without careful management, information which is not accessible though one server may be accessible through another.

Bottom Line: CubeWerx has taken care to ensure that information is properly protected along all access paths.

Path Manipulation on GET

RFC-2626 section 15.2 states “If an HTTP server translates HTTP URIs directly into file system calls, the server MUST take special care not to serve files that were not intended to be delivered to HTTP clients.” The threat is that an attacker could use the HTTP path to access sensitive data, such as password files, which could be used to further subvert the server.

Bottom Line: CubeWerx has taken care to validate all GET URLs to make sure they are not trying to access resources they should not have access to.

Path Manipulation on PUT and POST

A transaction operation adds new or updates existing resources on the API. This capability provides a whole new set of tools to an attacker.

Many of the resources exposed though an OGC API include hyperlinks to other resources. API clients follow these hyperlinks to access new resources or alternate representations of a resource. Once a client authenticates to an API, they tend to trust the data returned by that API. However, a resource posted by an attacker could contain hyperlinks which contain an attack. For example, the link to an alternate representation could require the client to re-authenticate prior to passing them on to the original destination. The client sees the representation they asked for and the attacker collects the clients’ authentication credentials.

Bottom Line: CubeWerx has taken care to ensure that transaction operations are validated and that updates do not contain any malignant content prior to exposing it through the TEP Marketplace API.

1. Scope

This document describe the design of the CubeWerx TEP Marketplace. The document contains the following sections:

-

Scenarios: Describe the primary user scenarios that the TEP must satisfy.

-

Summary of requirements: Presents a summary of the requirements for the TEP marketplace.

-

System architecture overview: Presents the architecture of the system and describes the component of the system.

-

Security and access control: Describes the authentication and access control module. As the name suggested this modules allows TEP users to log onto the system and use resource offered by the TEP.

-

Workspace manager: Describes the workspace module which implement a virtual work environment for TEP users. All the resources a TEP user owns or has access to are encapsulated within their workspace.

-

Catalogue: Describes the catalogue of the TEP. The TEP catalogue contains metadata about all the resources of the TEP and the associations between those resources. The catalogue offers a search API to make those resources discoverable.

-

Jobs manager: Describes the jobs module which defines an API for managing jobs that are implicitly created whenever any processing is performed on the TEP. The API allows users to get a list of jobs, the status of a specific jobs and the results of processing once a job has completed.

-

License manager: Every resource offered by the TEP is subject to license requirements. The license manager is responsible to keeps track of all the licenses and enforcing license requirements whenever a TEP resource is accessed.

-

Notification and analytics module: Describes how users can subscribe to receive notification of events of interest that occur on the TEP. The TEP maintains an event log which is the basis of a subscription and notification system. The event log is also the primary data source for the analytics tools offered by the TEP.

-

OGC Application Package: Describes how an application package is used to deploy application to the TEP. An application package is a JSON document that defines the inputs and output of an application, specifies the execution unit of the application and includes metadata about the resource requirements to run the application.

-

Workflows and chaining: Describes how applications deployed on the TEP can be assembled into a larger sequence of tasks that process a set of data. Workflows can be executed in an ad-hoc manner or be parameterized and deployed to the TEP as new processes.

-

Execution Management Service: Describes the EMS which is the central hub of the TEP. The EMS interacts with and coordinates the operation of all the other components of the TEP to perform the functions of the TEP.

-

Application Deployment and Execution Service: Describes the ADES which is responsible for running the execution unit of a TEP process using the resources of the underlying cloud computing environment. The ADES is also responsible for making the results of processing available to the entity executing a process.

-

Quotation and billing: Describes how a user can get a cost estimate for executing a process on the TEP for a specific set of inputs. Once an application has been executed, this module is responsible for billing the costs of the run to the initiating TEP account.

-

TEP Marketplace Web Client: Describes the web front end of the TEP.

-

Parallel execution manager: Describes how the ADES analyzes and execute request to determine the extent to which that execution can be parallelized.

-

Results manager: Describes how the ADES make processing results available to the initiating entity.

-

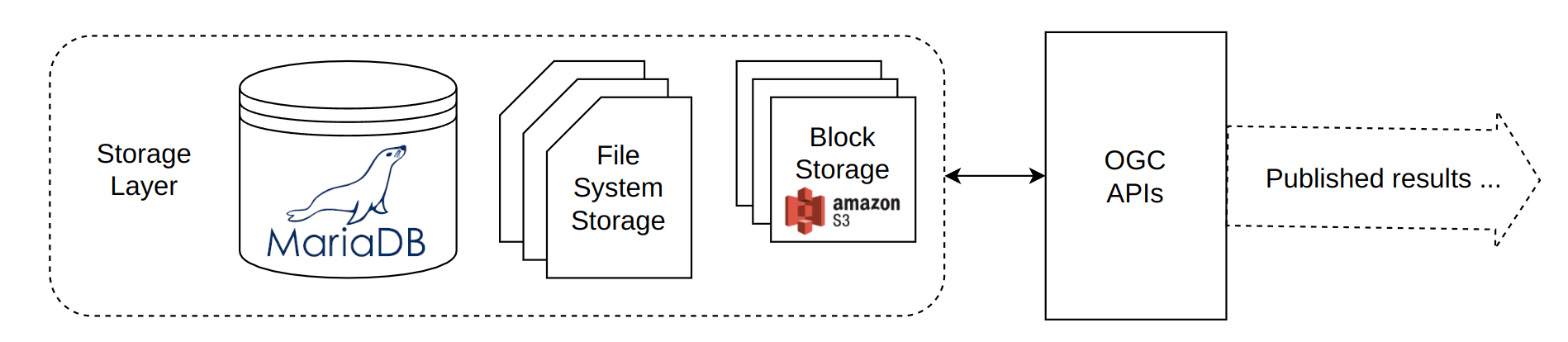

Storage layer: Describe how data, TEP thematic data, user data and processing results are stored in the TEP. This is via a combination of database, file system storage and cloud object or block storage (e.g. S3).

2. References

The following normative documents are referenced in this document.

3. Terms and Definitions

3.1. Acronyms

ADES - Application Deployment and Execution Service

AMC - Application Management Client

API - Application Programming Interface

APP - User-define application

COTS - Commercial Off The Shelf

CRUD - Create, Replace, Update, Delete

EMS - Execution Management Service

IdP - Identity Provider

MOAW - Modular OGC API Workflows

OAProc - OGC API Processes API

OGC - Open Geospatial Consortium

STS - Security Token Service

TEP - Thematic Exploitation Platform

3.2. Terms

- area of interest

-

An Area of Interest is a geographic area that is significant to a user.

- application package

-

A Context Document is a document describing the set of services and their configuration, and ancillary information (area of interest etc.) that defines the information representation of a common operating picture.

- Dockerfile

-

A text document that contains all the commands a user could call on the command line to assemble a software image.

- container

-

A lightweight and configurable virtual machine with a simulated version of an operating system and its hardware, which allow software developers to share their computational environments.

- CRUD

-

An acronym for Create, Replace, Update and Delete which are the data manipulation operations supported by RESTful APIs using the POST, PUT, PATCH and DELETE methods defined in HTTP.

- infrastructure as a service (IaaS)

-

A standardized, highly automated offering, where compute resources, complemented by storage and networking capabilities are owned and hosted by a service provider and offered to customers on-demand. Customers are typically able to self-provision this infrastructure, using a Web-based graphical user interface that serves as an IT operations management console for the overall environment. Application Programming Interface (API) access to the infrastructure may also be offered as an option.

- resource

-

A resource is a configured set of information that is uniquely identifiable to a user. Can be realized as in-line content or by one or more configured web services.

- TEP-holdings

-

The primary dataset(s) offered by the TEP. For example RCM data or Sentinel-1 iamgery products.

- user’s TEP workspace data

-

Uploaded data and processing results generated by the user and stored in their workspace.

- virtual machine image

-

A virtual machine image is a template for creating new instances. An image can be plain operating systems or can have software installed on them, such as databases, application servers, or other applications.

- workspace

-

A virtual environment that manages all the resources and assets owned by a specific user.

- thematic exploitation platform (TEP)

-

A "Thematic Exploitation Platform" refers to an environment providing a user community interested in a common Theme with a set of services such as very fast access to (i) large volume of data (EO/non-space data), (ii) computing resources (iii) processing software (e.g. toolboxes, retrieval baselines, visualization routines), and (iv) general platform capabilities (e.g. user management and access control, accounting, information portal, collaborative tools, social networks etc.). The platforms thus provide a complete work environment for its' users, enabling them to effectively perform data-intensive research and data exploitation by running dedicated processing software close to the data.

5. Scenarios

5.1. App Developer Scenario

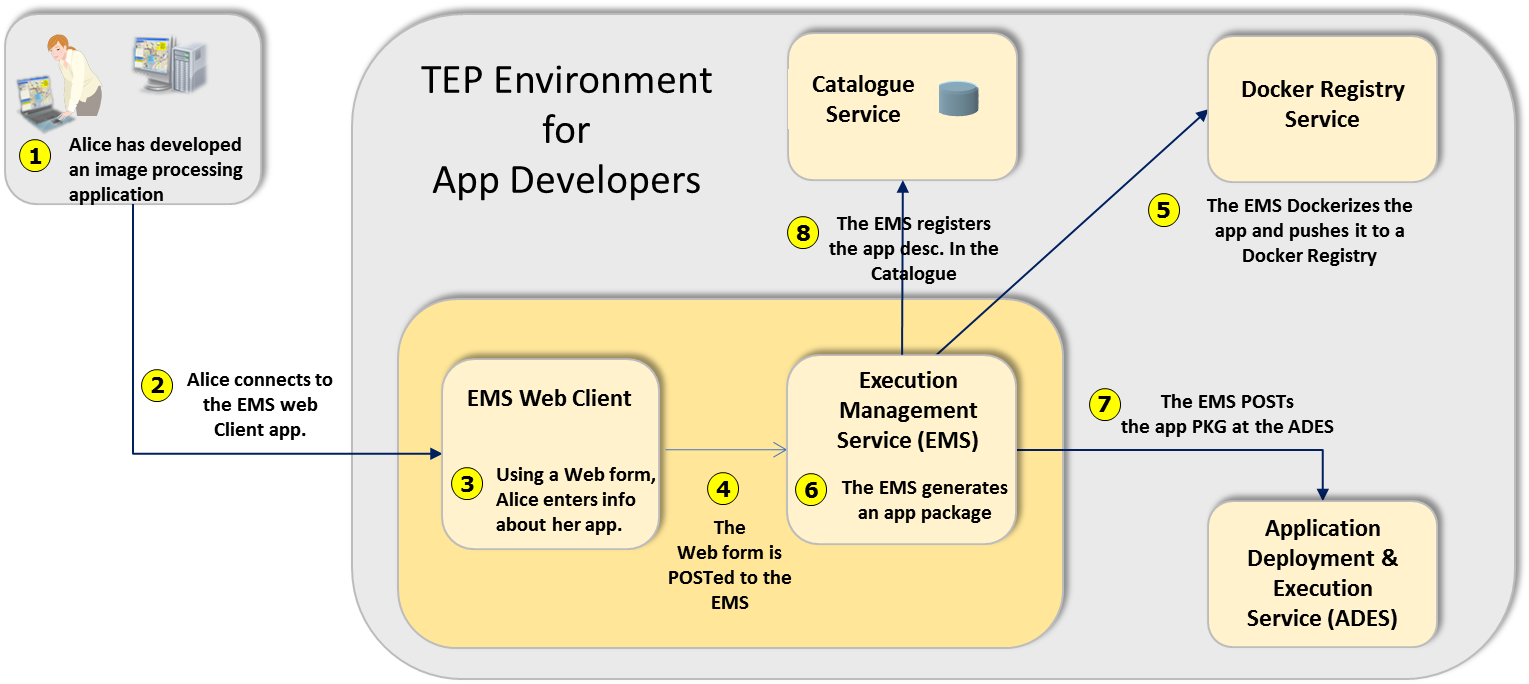

The app developer is a user who have created an application in their local environment and now want to deploy that application to the TEP marketplace. The following figure illustrates, at a high level, the basis steps taken by the user and the TEP marketplace to deploy the application to the ADES and make it available for execution. Initially the application is only available to the user for execution but eventually, the user can submit the application for public deployment at which point other user will be able to discover and executed the application within the TEP environment.

Figure - App Developer Scenario

Figure - App Developer Scenario

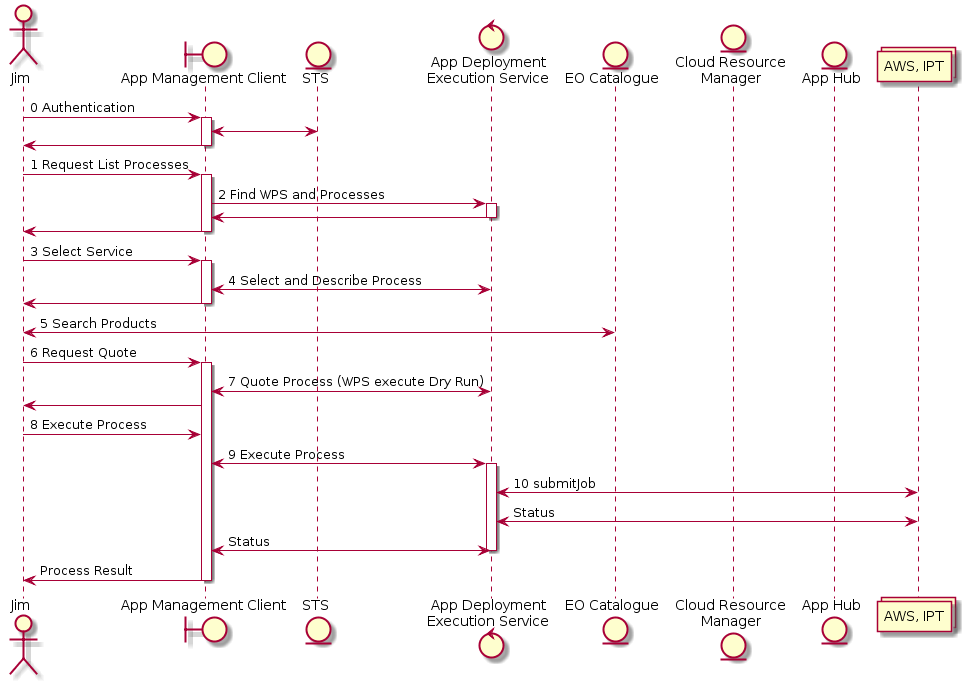

5.2. App User Scenario

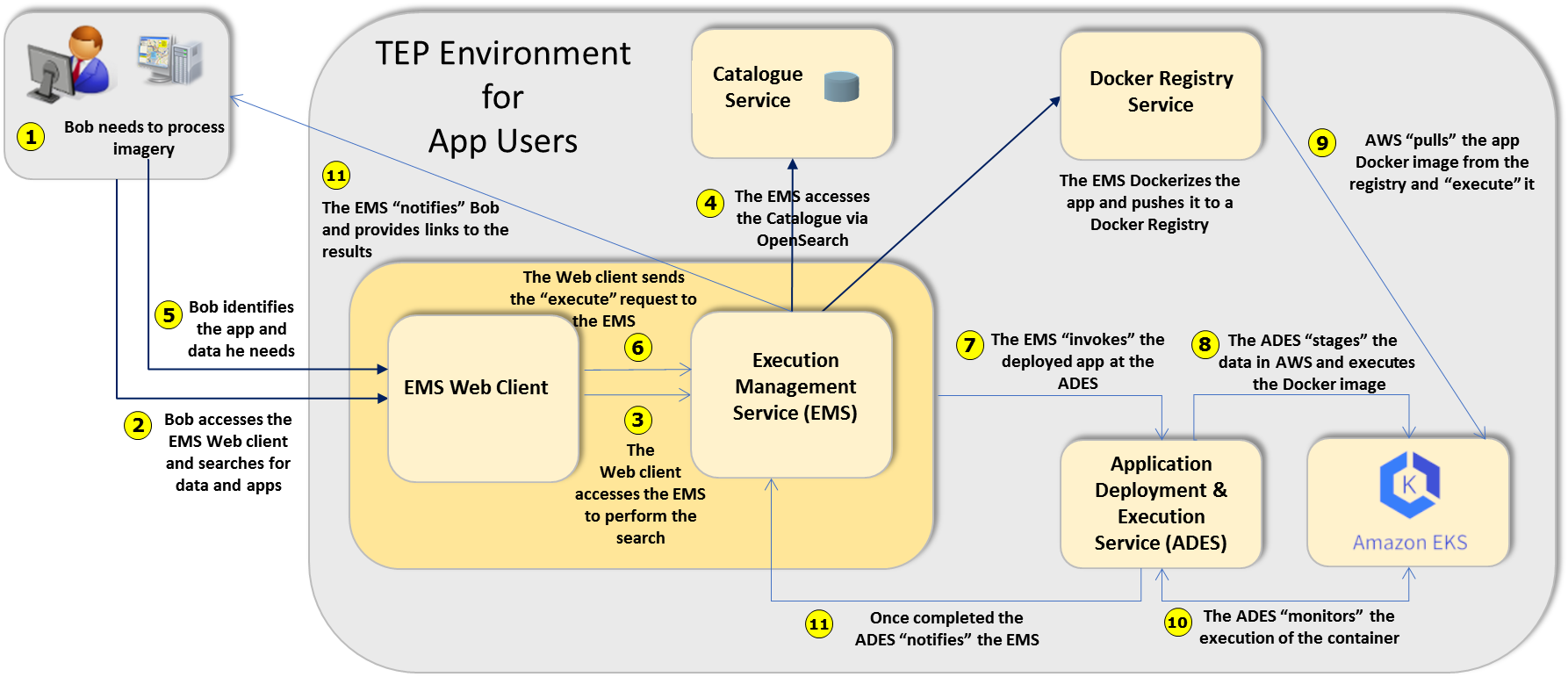

For a typical TEP user, interaction with the system begins by logging into the TEP marketplace and performing a search for data of interest and/or applications of interest. Since associations are maintained in the catalogue between TEP resources find one (say data) leads to discovering the other (i.e. applications suitable to operate on that data). Having discovered data and application of interest, the user can then proceed to execute the application. This following figure illustrates, at a high level, this workflow.

Figure - App User Scenario

Figure - App User Scenario

6. Requirements summary

6.1. Overview

In simple terms, the basic requirement of the TEP marketplace is to enable data processing algorithms uploaded by developers to be run by users, at scale, on very large spatial and non-spatial datasets hosted on the local cloud infrastructure.

-

Developers must be able to package their applications in containers, upload and register them with the system, for discovery by other users.

-

Users must be able to search an online catalogue of available applications and data, and construct job requests that will run their chosen application on the selected subset of data.

-

Results should be delivered either as a downloadable imagery, or via Web services, such as OGC Web Map, Web Map Tile, or Web Coverage services.

All interaction with applications, data and services must be protected by a role-based, spatially-aware authentication and access control system.

6.2. Web front end

The web front-end is the primary entry point for the TEP. The web front-end must satisfy the following requirements:

-

Developer interface

-

Provide web interface to develop, upload and deploy applications.

-

-

User interface

-

Provide web interface to allow TEP exploitation users to:

-

Discover TEP resources (i.e. processes and data)

-

Get quotation for executing process on data

-

Submit execute request to the system

-

Schedule execute request to the system (perhaps periodic)

-

Execute GUI apps (e.g. Jupyter notebooks, QGIS, etc.)

-

AWS Workspaces / AWS AppStream 2.0

-

-

-

6.3. Authentication & Access Control

All aspect of the TEP must be governed by authentication and access control. TEP users must be authenticated in order to access the TEP. Once authenticated, access control must be applied to control what resources the user can access.

The authentication and access control requirements for the TEP include:

-

Single sign-on

-

Role-based access control to TEP resources

-

Spatially aware access control (i.e. restrict or enable access and processing based on spatial constraints)

-

Federated access control allowing access to resources on another federated TEP

6.4. User Workspace Management

Having logged into a TEP, the user is placed in a virtual environment called a "workspace". All the resources of the TEP that the user owns or has access to are available in their workspace. This includes:

-

Apps the user has uploaded or purchased

-

Data the user has uploaded, purchased or has been granted access to

-

Processing results

-

Version control

-

Save and resume

6.5. Job manager

Each processes executed on a TEP will, either explicitly or implicitly, create a job that can be used to:

-

monitor the status of a job (e.g. submitted, running, completed, etc.)

-

access the results of a job

-

cancel a job

-

delete a job (and associated resources)

-

schedule job execution

-

periodic execution or re-execution

-

triggered execution

-

6.6. License manager

It is anticipated that many resources available on a TEP will have associated with then a license. The function of the license manager is to:

-

manage licenses for TEP resource (data, applications, etc.)

-

check license requirement each time a resource is accessed

-

if necessary, solicit license agreements (i.e. click through license) before a resource is accessed

6.7. Notification and analytics

The TEP is a collaborative environment where "events" are constantly occurring. The notification and analytics module allows these events to be logged and that log, in turn, to be used as the basis of a notification system and analytics. The requirements for the notification and analytics module include:

6.7.1. Notifications

-

Notification can be explicitly requested (i.e. via subscription) or they can be implicit (i.e. the system will notify you regardless such as when you exceed a quota or when your processing results are ready)

-

For personal stuff there is a question about whether notifications need to be sent via email or sms, or whether they just you into your notification feed.

-

-

This distinction might be, when its your own stuff in your workspace you automatically get notified; if, however, it is something outside your workspace, it would be op-in and you need to subscribe to get notified.

-

TEP-holdings = data that the TEP offers that is not 'BYOD' data in a user’s workspace

-

Like the GSS, a subscription includes an notification type. Usually that is an email notification but is can also be more general; something like execute this process so this allows processes triggering.

-

For each notification type we need to also define the context-sensitive content of the notification. For example, an SMS notification would be a one-liner while an email notification would include more content and a machine-readable notification would be some kind of JSON document.

-

Potential notification types:

-

email

-

sms

-

webhook

-

process execution

-

feed or notification log

-

-

For the notifications listed bellow we need to fill in:

-

What bits of information are required for each event

-

There will be a set of properties that are common to all events (id, title, timestamp, etc.)

-

There will also be a set of "custom" properties specific to each event type.

-

-

-

Types of notifications

-

User Quota (i.e. the amount of space they can consume in their workspace)

-

when user’s workspace quota is getting close to being exceeded

-

when user’s workspace quota has been exceeded

-

-

ADES events

-

let user know when an application/process is added/modified/deleted on the ADES

-

notify user when processing results are ready

-

notify user when process execution has failed

-

-

TEP-holdings

-

notification when data is added/modified/deleted

-

-

Web services

-

notification when new web service is available

-

notification when new content is added to a web service

-

-

-

Offers a subscription API that supports:

-

Subscribe operation

-

Unsubscribe operation

-

Renew operation (might no be necessary)

-

GetSubscriptions operation

-

Pause subscription operation

-

Resume subscription operation

-

DoNotification operation

-

6.7.2. Analytics

-

Offers and analytics module that can be used (for example) to:

-

Execution analytics

-

What app was executed

-

When the app was executed

-

The specific execute request

-

Who executed it

-

Timing statistics

-

success/failure

-

reference to results

-

Using this set of information all kinds of analytics can be determined

-

-

Create a heatmap of the data usage

-

NOTES: * we envision functions "start_execution", "process_progress" and "finish_execution" to register the fact that a process has started executing, is in the middle of executing and has completed execution * we also envision a function to get this information * all this is probably done within the ADES; the ADES API includes the GetStatus operation that can externalize this information

6.8. Execution and Management service

The execution and management service (EMS) is the primary hub of the TEP marketplace. The EMS interacts with all the other TEP components to enable and coordinate the functionality of the TEP. The functions of the EMS include:

-

Dynamically generate the web front end of the TEP

-

Coordinate app execution with the ADES

-

Act as a coordinate node in federated app execution especially as related to processing workflows

-

Implements the "app store" functionality of the TEP

-

Offer an "app store" API; ideally something similar to the Google or Apple app stores so that developers are presented with a familiar environment for deploying their apps.

-

|

Note

|

Developers should not be able to "deploy" their applications directly to the TEP. Rather, they should "submit" their applications to the TEP and then, after some validation process, the application becomes available for use on the system. |

6.9. Catalogue

The TEP shall include a central catalogue that:

-

Maintains metadata about all TEP resources

-

Maintains associations between TEP resources (e.g. application "A" can operate on data offerings "B" and "C")

-

Offers a search API to make TEP resources discoverable

6.10. Application execution (ADES)

The ADES is responsible for executing user-defined app in the underlying cloud computing environment. As such the ADES must:

-

Deploy user-defined app and make them available through the ADES' API

-

Support workflows and chaining of deployed applications

-

Support delegation (i.e. ability to execute a process on another TEP)

In order to deploy applications, an ADES must accept an "application package" which is a JSON document that:

-

defines the inputs the application accepts

-

defines the output the application generated

-

defines or provides a reference to the execution unit of the application (i.e. the executable code of the application)

The execution unit can be a binary executables bundled in a container (e.g. Docker container) but it can also be code written in some interpreted language such as Python or R.

6.11. Cost estimation and billing (ADES)

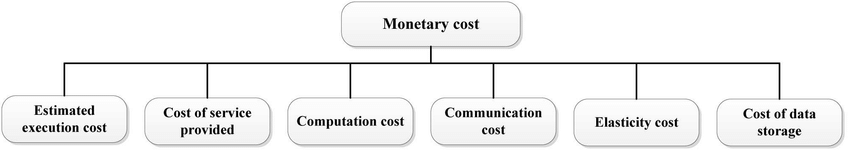

User-defined app executed on a TEP run in a cloud computing environment and as such consume chargeable resources. As a result, users will want to be able to get a cost estimate of executing an application on a specified set of inputs. The cost estimation module must:

-

analyze the description of the processes and the inputs specified in a execute request and generate a quotation for this execute request

-

the quotation can include alternative quotations based on different cloud computing resource utilization profiles (e.g. more cloud computing resources leading to shorter execution time and being more expensive versus fewer cloud computing resources leading to longer execution time but being less expensive)

6.12. Parallel execution module (ADES)

The function of the parallel execution module is to analyzes an execute request and the process description of a deployed user-defined app and determine the extent to which the execute request can be parallelized. For example, consider an execute request that references N scenes of satellite imagery as input. If the ADES determines that the N scenes can be processed independently, then the ADES could decide to group the input scenes into M batches and then execute the batches in parallel.

6.13. Results manager (ADES)

The result of executing an app is some output. The function of the result manager is to present the results as requested in the execute request. Results can be:

-

presented in the body of the response

-

stored in persistent storage for repeated retrieval

-

deployed as an OGC API so that the results can be accesses using any OGC API client

Results deployed as an OGC API can be updated and added to via subsequent runs of the process of other data. Subsequent process runs can be ad-hoc but also scheduled and periodic.

6.14. Storage layer

The storage layer is the repository of data in the TEP. The storage layer:

-

stores all "thematic" data offered by the TEP (e.g. image archive of RCM data),

-

stores all user uploaded data,

-

stores all processing results.

Data in the storage layer can be stored to:

-

a database (e.g. RDBMS),

-

file system storage (e.g. for source image for coverages),

-

block or object storage (e.g. S3).

Data in the storage layer can be accessed:

-

directly from the specific storage method

-

via the OGC API for results published as an OGC API deployment

6.15. Federation

Assuming that a trust agreement exists between two TEPs one TEP should be able to call a process on another TEP and retrieve the results of that processing.

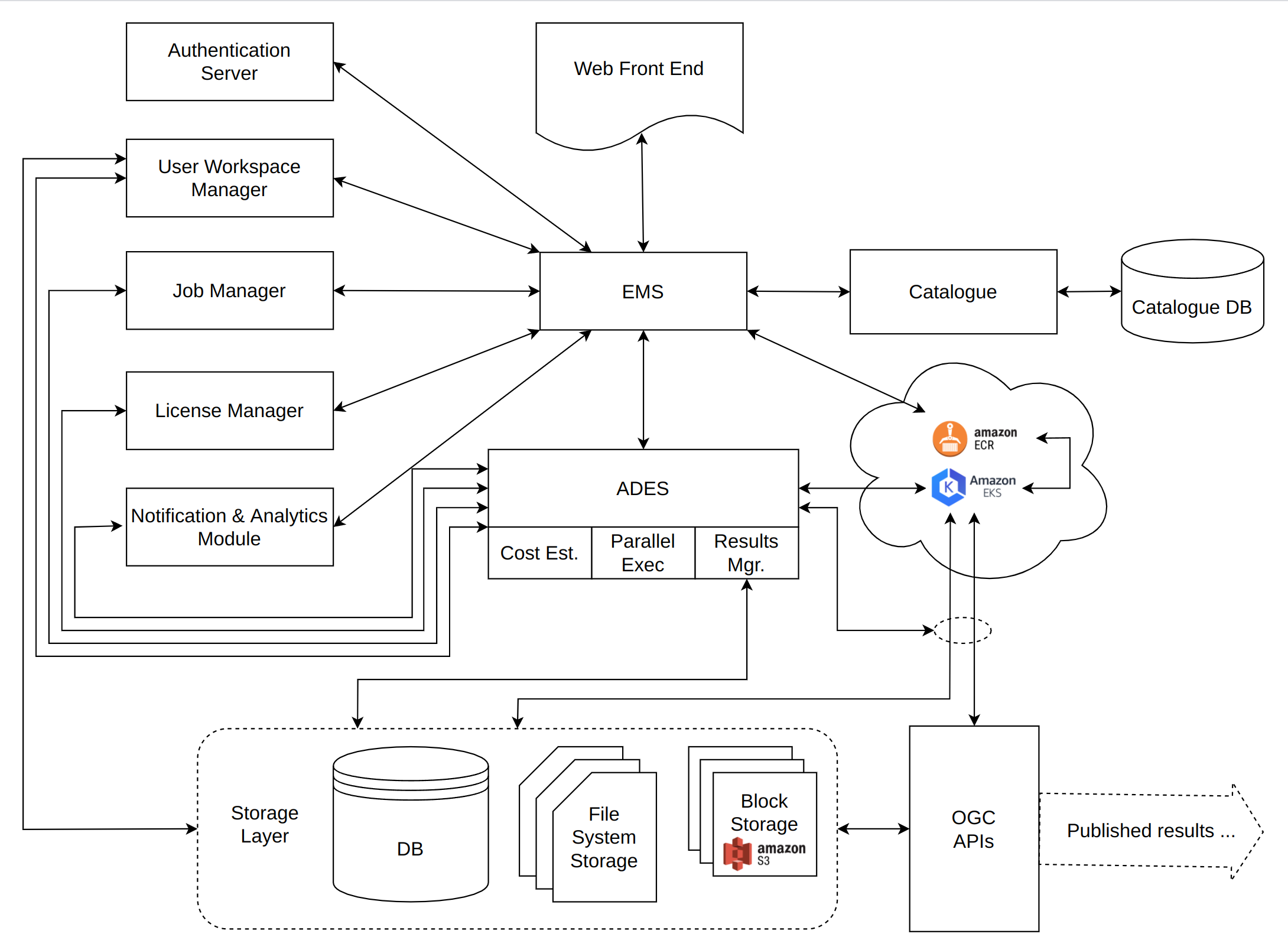

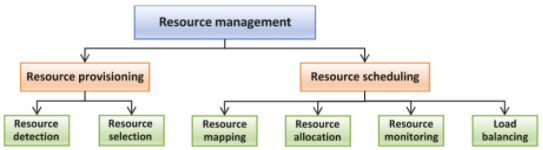

7. System Architecture Overview

The main functional components of the TEP marketplace architecture are presented below. Their interactions with each other are detailed in the Scenarios clause.

Figure 1 - TEP Architecture

Figure 1 - TEP Architecture

7.1. Web front end

The web from end is the primary entry point for users of the TEP. It is generated by and interacts with the Execution Management Service. It provides interfaces for both app developers and app consumers. It allows developers to upload and register their applications with the system and allows consumers to search for applications and data, execute those applications, access and publish the results.

7.2. Execution Management Service (EMS)

The EMS could fairly be considered the “hub” of the system. It acts as the back end for the Web Client. As such, it manages all interactions between the user and other parts of the system.

It is responsible for:

-

authenticating TEP users

-

enforcing access control rules to TEP resources

-

interfacing with the job manager to monitor job execution and report progress

-

interfacing with the license manager to enforce licensing rules

-

interfacing with the pub/sub manager to receive and trigger notifications

-

creating and managing application packages from uploaded user apps

-

containerizing apps and pushing them to the container registry

-

registering apps with the catalogue and establishing associations between the app and the data it can operate on

-

conducting catalogue searches on behalf of the user, both for apps and data

-

interfacing with the Application Deployment and Execution Service (ADES) to execute apps

7.3. Authentication server

This service provides secure access and rule-based access control to TEP resources. It also provides user management capabilities (i.e. creating users, password recovery, user roles, api keys, etc.)

7.4. User workspace manager

Each TEP user is provided with a workspace. A workspace is a virtual environment that contains all the resources that a TEP user owns or has access to. The workspace manager module implements this virtual environment.

7.5. Job manager

The job manager, manages the lifecycle of jobs created on the TEP. It allows jobs to be monitored, cancelled, deleted, etc. It also allows results to be associated with a job and retrieved once a job is completed.

7.6. License manager

The license manager is responsible for maintaining and enforcing licensing requirements for TEP resources. When necessary, the license manager will (for example) trigger a click-through license to access licensed resources on the TEP.

7.7. The Notification & Analytics module

The Notification & Analytics allow TEP users to subscribe to and receive notifications about various events that can occur in the TEP. For example, the queuing of a job for execution, the completion of the job, exception events, additions of new data to the TEP, etc.

The module also provides analytic tools to view and analyze the event data.

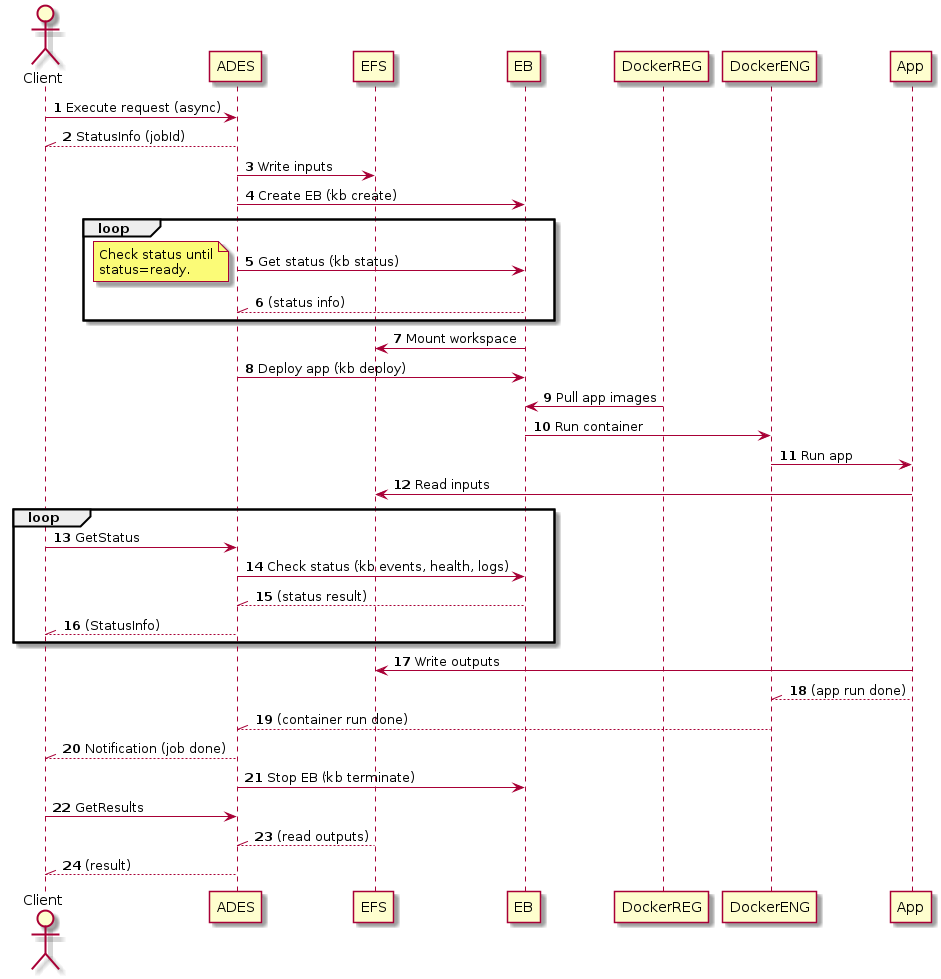

7.8. Application Deployment and Execution Service (ADES)

As its name implies, the ADES is responsible for deploying and executing the app developers’ application packages in the cloud provider’s systems. It interfaces with the cloud provider’s proprietary system for executing Docker containers and manages their execution and monitoring. It is worthy of note that this communication comprises the only cloud-provider-specific interface of the entire architecture. All other components would be identical, regardless which cloud provider is used.

The ADES also interacts with the other modules of the TEP to:

-

store processing results in a user’s workspace

-

this can be storing the results as file, in block storage or even publishing the results as an OGC API

-

-

to communicate the progress of jobs to the job manager

-

to ensure that licensing requirements are satisfied before executing a process

-

to trigger notifications of ADES events

7.9. Catalogue service

The catalogue is a central, searchable repository for both data and applications. It is an OGC-Compliant Service that will serve both app developer and consumer requirements through the EMS.

7.10. Docker registry

Docker is a tool designed to make it easier to create, deploy, and run applications by using containers. Containers allow a developer to package up an application with all of the parts it needs, such as libraries and other dependencies, and ship it all out as one package. By doing so, thanks to the container, the developer can rest assured that the application will run on any other machine regardless of any customized settings that machine might have that could differ from the machine used for writing and testing the code.

A Docker image is a light-weight virtual machine. But unlike a virtual machine, rather than creating a entire virtual operating system, Docker allows applications to use the same kernel as the system that they’re running on and only requires applications be shipped with things not already running on the host computer. This gives a significant performance boost and reduces the size of the application.

Applications in the TEP marketplace will be packaged into Docker containers for execution by the cloud service provider’s system. The Docker registry is a managed repository of Docker containers with API access both for uploading packages (through the EMS) and for accessing and execution them (by the cloud service provider’s execution service).

7.11. Elastic Kubernetes Service (EKS) Container Execution Service

The TEP uses Amazon’s Elastic Kubernetes Service (EKS) to simplify the Docker execution environment and provide scalability for large jobs.

EKS is described by Amazon as “a compute engine that lets you run containers at scale on AWS infrastructure without having to manage clusters or individual virtual servers – This removes the need to choose server types, decide when to scale your clusters, or optimize cluster packing. EKS removes the need for customers to interact with or think about servers or clusters. EKS lets you focus on designing and building your applications instead of managing the infrastructure that runs them.”

Containers running in EKS access TEP data via the storage layer either directly or mediated by the ADES.

7.12. Storage layer

The storage layer is where TEP data is stored. TEP data includes the thematic data that the TEP offers, data uploaded into user workspaces and processing results.

TEP data can be stored in:

-

a MariaDB database,

-

in filesystem storage,

-

or in S3 block or object storage.

Apps deployed on the TEP can access TEP data directly (i.e. the app knows, for example, how to read data from a S3 bucket) or mediated by the ADES (i.e. the ADES stages the data for the application in a form that the application can read).

TEP data case be accesses directly for storage or it can be accesses via an OGC API.

8. Security and Access Control

8.1. Overview

CubeWerx is building some components of the TEP architecture by also extending and enhancing our suite of standards-compliant geospatial web services. Over the past 10 years, we have built out a sophisticated infrastructure for securing such services, based around a cryptographically secure identity token, and “spatially-aware” web services that can use this token to enforce access control.

The Security and access control component is presented in some detail, since 3rd party applications may need to use these authentication methods to access services generated by the TEP.

8.2. Identity Provider Token Service

A sign-in request to the TEP authentication server will return a secure token encoded (in the case of Web applications) in the form of an HTTP cookie called CW_CREDENTIALS. Encoded within the value of this cookie is a small JSON document containing an authenticated set of credentials. These credentials are packaged in an OpenPGP message (RFC 4880) and are signed with the PGP private key of a CubeWerx Authentication Server that is set up to provide authentication for the TEP. All other servers within the TEP are equipped with the public key(s) of the trusted authentication server(s) of that domain. This allows the servers to verify the authenticity of the credentials, since a successful decryption with the public key of one of the trusted authentication servers implies that the credentials must have originated from that authentication server. Note, therefore, that it is not necessary for a server to contact a CubeWerx Authentication Server in order to decrypt or verify the authenticity of a set of credentials.

A user is authenticated by making a login request to a CubeWerx Authentication Server. This can be done directly by having the user visit the CubeWerx Authentication Server with his/her browser, at which point the user will be prompted for a username and password. The authentication server will then pass a CW_CREDENTIALS cookie containing the appropriate credentials back to the browser. Alternatively, a browser-based application can collect the user’s username and password and contact the authentication server on behalf of the user (via either an Ajax request or a redirection to the authentication server with a callback back to the application). Either way, the web browser receives a CW_CREDENTIALS cookie containing the user’s authenticated credentials for that domain.

Since the CwAuth credentials for a particular domain are communicated via an HTTP cookie, web browsers (and thus browser-based applications) will automatically pass along such credentials when communicating with servers within that domain. Furthermore, properly equipped servers can cascade such credentials to other servers further down the service chain by recognizing the CW_CREDENTIALS cookie in the request and passing it along in the HTTP headers of any requests that it makes.

A web service can determine the credentials of the current user in one of two ways:

-

By making a getCredentials request to a CubeWerx Authentication Server and parsing the unencrypted credentials that are returned the response.

-

By decrypting the CW_CREDENTIALS cookie itself with the public key of a known (and trusted) CubeWerx Authentication Server.

A decrypted CW_CREDENTIALS cookie contains an JSON representation of the user’s credentials, and has the following form:

{

"domain": "xyzcorp.com",

"firstName": "John",

"lastName": "Doe",

"eMailAddress": "jdoe@xyzcorp.com",

"username": "jdoe",

"roles": [ "User", "Administrator" ],

"authTime": "2018-04-18T20:19:32.167Z",

"expires": "2018-04-19T08:19:32.167Z",

"loginId": "53vQyeTY",

"hasMaxSeats": true,

"originServer": "https://xyzcorp.com/cubewerx/cwauth"

}All of the properties of this credentials object other than domain and username are optional. If the value of the hasMaxSeats property is false, it’s usually omitted. The originServer property indicates the URL of the CubeWerx Authentication Server that authenticated the credentials.

8.2.1. Authentication Scenarios

There are several situations within the TEP where authentication may be required.

Web-based application authenticating via Ajax request

-

The user clicks a "log in"/"sign in" button on the web-based application.

-

The web-based application prompts the user for a username and password.

-

The web-based application makes an Ajax "login" request to the configured CubeWerx Authentication Server, passing along the user’s username and password.

-

The CubeWerx Authentication Server authenticates the user for the current domain and prepares a set of credentials which it encrypts using a private key and packages into a CW_CREDENTIALS cookie. This CW_CREDENTIALS cookie is then returned to the web-based application via the HTTP response headers. The HTTP response body contains an unencrypted version of the credentials in JSON format.

-

The web browser automatically absorbs the CW_CREDENTIALS cookie and will include it in all future HTTP requests to all servers in the domain that the credentials were authenticated for. The web-based application optionally parses the JSON response and greets the user.

Web-based application redirecting to authentication server

-

The user clicks a "log in"/"sign in" button on the web-based application.

-

The web-based application directs the web browser to the login page of the configured CubeWerx Authentication Server, including a callback URL (back to the appropriate state of the web-based application) as a parameter of the login page’s URL.

-

The CubeWerx Authentication Server prompts the user for a username and password.

-

The CubeWerx Authentication Server authenticates the user for the current domain and prepares a set of credentials which it encrypts using a private key and packages into a CW_CREDENTIALS cookie.

-

The CubeWerx Authentication Server sends a web page back to the user displaying the authenticated credentials. The HTTP response headers of this web page include the CW_CREDENTIALS cookie, which the web browser automatically absorbs.

-

The CubeWerx Authentication Server directs the web browser back to the web-based application, which then greets the user.

Server cascading credentials to another server

-

A server receives a request that includes a CW_CREDENTIALS cookie in its HTTP headers.

-

The server optionally decrypts the CW_CREDENTIALS cookie with the public key of an authentication server. This step is only necessary if this server itself needs to control access based on user identity or if the authenticated user needs to be identified for some other reason (such as for logging or for a personalized greeting message).

-

When making a cascaded request to another server, the server checks the domain of the other server. If it’s in the same second-level domain, it prepares a "Cookie" HTTP header containing a copy of the CW_CREDENTIALS cookie and passes that along with the cascaded request.

Desktop application authenticating via the HTTP Basic Authentication mechanism

-

The desktop application attempts to connect to CubeSERV via an "htcubeserv" URL and is challenged for an HTTP-Basic identity.

-

The user supplies a username and password, and the desktop application attempts the connection to CubeSERV again. (Or the application supplies a configured or cached username and password on the first request.)

-

The CubeSERV authenticates the HTTP-Basic identity and prepares CwAuth credentials for the user by logging into the authentication server on the user’s behalf.

-

The HTTP response includes a CW_CREDENTIALS cookie so that, if the application supports cookies, future requests can be made more efficiently.

8.3. Authentication Server

8.3.1. Overview

The Authentication Server operations can be invoked either via HTTP GET (supplying the parameters as part of the URL) or via HTTP POST (supplying the parameters in the body of the request with an application/x-www-form-urlencoded MIME type). The usage of HTTP POST is recommended for the "login" operation in order to prevent plaintext usernames and passwords from being visible in the URL. Responses that indicate a status in the response body will also report this status via a CwAuth-Status MIME header. If a request to the Authentication Server contains cross-origin resource sharing (CORS) headers, then the origin of the request must be in the same domain as the Authentication Server.

8.3.2. Operations

The Authentication Server supports several operations:

Login

Parameters

operation=login&username=<username>&password=<password>&format=XML|JSON|HTML[&callback=<callbackUrl>]

Usage

Authenticates the user for the current domain (i.e., the second-level domain name of the URL that the CubeWerx Authentication Server was invoked with) and logs him/her in by supplying the user agent (e.g., web browser) with a CW_CREDENTIALS cookie for that domain.

The usage of HTTP POST (supplying the parameters in the body of the request with an application/x-www-form-urlencoded MIME type) is recommended in order to prevent plaintext usernames and passwords from being visible in the URL.

Returns

If authentication is successful, a CW_CREDENTIALS cookie is returned in the response HTTP headers, and a "loginSuccessful" response in the requested format is returned in the response body. The response body includes unencrypted credentials.

<?xml version="1.0" encoding="UTF-8"?>

<CwAuthResponse>

<Status>loginSuccessful</Status>

<CwCredentials>

<Domain>xyzcorp.com</Domain>

<FirstName>John</FirstName>

<LastName>Doe</LastName>

<EMailAddress>jdoe@xyzcorp.com</EMailAddress>

<Username>jdoe</Username>

<Role>User</Role>

<Role>Administrator</Role>

<AuthTime>2018-04-18T20:19:32.167Z</AuthTime>

<Expires>2018-04-19T08:19:32.167Z</Expires>

<LoginId>53vQyeTY</LoginId>

<OriginServer>https://xyzcorp.com/cubewerx/cwauth</OriginServer>

</CwCredentials>

</CwAuthResponse>{

"status": "loginSuccessful",

"credentials": {

"domain": "xyzcorp.com",

"firstName": "John",

"lastName": "Doe",

"eMailAddress": "jdoe@xyzcorp.com",

"username": "jdoe",

"roles": [ "User", "Administrator" ],

"authTime": "2018-04-18T20:19:32.167Z",

"expires": "2018-04-19T08:19:32.167Z",

"loginId": "53vQyeTY",

"originServer": "https://xyzcorp.com/cubewerx/cwauth"

}

}HTML (if a callbackUrl is provided):

(an HTTP redirection to the specified callbackUrl)

HTML (if a callbackUrl is not provided):

(an HTML document showing the user’s new credentials)

If authentication is unsuccessful, a response that indicates a status of "loginFailed" is returned. If unsuccessful login requests are occurring too frequently for this user, the login request is rejected and a response that indicates a status of "loginAttemptsTooFrequent" is returned. This protects the CubeWerx Authentication Server against brute-force password-cracking attempts.

Logout

Usage

Logs the user out of the current domain (i.e., the second-level domain name of the URL that the CubeWerx Authentication Server was invoked with) by requesting that the user agent (e.g., web browser) expire the CW_CREDENTIALS cookie for that domain.

Returns

An empty CW_CREDENTIALS cookie with a cookie expiry time in the past is returned in the response HTTP headers, and a "logoutSuccessful" response in the requested format is returned in the response body.

XML Response:

<?xml version="1.0" encoding="UTF-8"?>

<CwAuthResponse>

<Status>logoutSuccessful</Status>

</CwAuthResponse>JSON Response:

{ "status": "logoutSuccessful" }HTML (if a callbackUrl is provided):

(an HTTP redirection to the specified callbackUrl)

HTML (if a callbackUrl is not provided):

(an HTML document indicating that the user has been logged out)

GetCredentials

Usage

Returns the user’s current credentials (i.e., the unencrypted contents of the user’s CW_CREDENTIALS cookie) for the current domain.

Returns

Either a "credentialsOkay", a "noCredentials", a "credentialsExpired" or a "credentialsInvalid" response in the requested format is returned in the response body. If "credentialsOkay" or "credentialsExpired", the response body includes unencrypted credentials. Note that a CW_CREDENTIALS cookie is not returned in the response HTTP headers, as this would be redundant. The "credentialsInvalid" response response is returned in situations where the user has credentials but they are not recognized by this authentication server.

XML Responses:

<?xml version="1.0" encoding="UTF-8"?>

<CwAuthResponse>

<Status>credentialsOkay</Status>

<CwCredentials>

<Domain>xyzcorp.com</Domain>

<FirstName>John</FirstName>

<LastName>Doe</LastName>

<EMailAddress>jdoe@xyzcorp.com</EMailAddress>

<Username>jdoe</Username>

<Role>User</Role>

<Role>Administrator</Role>

<AuthTime>2018-04-18T20:19:32.167Z</AuthTime>

<Expires>2018-04-19T08:19:32.167Z</Expires>

<LoginId>53vQyeTY</LoginId>

<OriginServer>https://xyzcorp.com/cubewerx/cwauth</OriginServer>

</CwCredentials>

</CwAuthResponse>or

<?xml version="1.0" encoding="UTF-8"?>

<CwAuthResponse>

<Status>noCredentials</Status>

</CwAuthResponse>or

<?xml version="1.0" encoding="UTF-8"?>

<CwAuthResponse>

<Status>credentialsExpired</Status>

<CwCredentials>

...

</CwCredentials>

</CwAuthResponse>or

<?xml version="1.0" encoding="UTF-8"?>

<CwAuthResponse>

<Status>credentialsInvalid</Status>

</CwAuthResponse>*JSON Responses: *

{

"status": "credentialsOkay",

"credentials": {

"domain": "xyzcorp.com",

"firstName": "John",

"lastName": "Doe",

"eMailAddress": "jdoe@xyzcorp.com",

"username": "jdoe",

"roles": [ "User", "Administrator" ],

"authTime": "2018-04-18T20:19:32.167Z",

"expires": "2018-04-19T08:19:32.167Z",

"loginId": "53vQyeTY",

"originServer": "https://xyzcorp.com/cubewerx/cwauth"

}

}or

{ "status": "noCredentials" }or

{

"status": "credentialsExpired",

"credentials": {

...

}

}or

{ "status": "credentialsInvalid" }HTML Response:

(an HTML document showing the user’s current credentials)

(if a callbackUrl is provided, then the HTML response document will include a button which allows the user to go to that URL, returning the user to the web application that invoked the getCredentials operation)

8.4. Access Control for TEP Components and Web Services

The access control mechanism to be deployed for the TEP and employed by the CubeSERV OGC Web Services is an internal software module built into all CubeWerx Web services that controls access to services, data and map layers in a number of ways, based on the identity of the user. It is configured as a set of rules (expressed as XML), where each rule has an appliesTo attribute indicating which user(s) or roles the rule applies to. An individual user may trigger more than one applicable rule, or none. If more than one rule is applicable, then the user is granted access to the union of what the applicable rules grant. Rules can be configured to expire after a certain time, allowing subscription times to be configured.

8.4.1. Identity Types

The following identity types can be specified:

-

IP address (or address range)

-

HTTP Basic identity

-

CubeWerx Identity Token (user, role or any)

-

OAuth Identity Token

-

OpenID Token

-

Everybody

8.4.2. Spatially Aware Access Control

Access control is implemented at the Web Service level in all TEP components, which enables each component to make intelligent decisions based on its individual domain “knowledge”.

For instance, based on a user’s identity, a map service can be instructed to allow access to certain map layers, or certain operations of the service, as well as restricting the request to certain geographical regions, represented by complex polygons if necessary, or limiting requests for high resolution data to lower resolution representations of that data. Images can be watermarked for certain roles or users if required.

This component is embedded in the Web Processing Servers that make up the TEP components, allow TEP administrators to set permissions and grant or deny access to various aspects of the system.

9. Workspace manager

Associated with each TEP user is a workspace. A workspace is a virtual environment where a user, depending on their role, can maintain and organize all their TEP resources. TEP resources include licenses, data, applications, jobs, etc. The workspace environment can also provide resource such as IDEs (e.g. Jupyter notebooks) for developing applications, etc.

The following sections describe the individual sub-components of the workspace manager.

9.1. TEP workspace

A TEP Marketplace user must have the ability to upload their own data. This area will be called "the user’s TEP workspace". To support this, the TEP Marketplace will have an S3 bucket, accessible only by the TEP Marketplace server, with the following directory structure:

users/

<username1>/

workspace/

...

jobs/

<username2>/

workspace/

...

jobs/

<username3>/

workspace/

...

jobs/

[...]Under a user’s workspace/ directory is a free-form directory structure of files that’s under the user’s complete control. It consists of:

-

all of the source data that the user uploads (e.g., GeoTIFF files, GeoJSON files, etc.), organized into whatever directory structure makes sense to the user

-

anything else that the TEP Marketplace apps need to operate

-

processing results (i.e., output of WPS), under a hardcoded

jobs/directory

The user should be presented with this directory as a complete filesystem through the TEP Marketplace, manipulatable in the standard filesystem ways. The only way the user can access this directory structure is through the TEP Marketplace (authenticated via CwAuth). There will be no direct access.

The reason for the extra workspace/ directory level is to allow the possibility of other TEP-managed directories to exist in S3 per username.

The user should be able to select one or more files or directories in their workspace and do things like download or delete the files. If the files and/or directories are data files, the user should also be given the option to expose them as a web service (see the Exposing workspace data as web services section). The user should also be able up upload new files and directories.

There will be per-user quotas on the size of a user’s workspace. The user should be given a visual indication of how full their workspace is (e.g., with a progress bar and/or "xGB of xGB used (x%)". We can determine the number of bytes taken up by an S3 directory with the command:

s3 size -recursive s3://{bucket}/{path}/9.2. Workflow management

The TEP workspace will use a MariaDB database (on the same database system where cw_auth exists) called cw_tep, which has the following table:

CREATE TABLE workflows (

username VARCHAR(255) NOT NULL,

title VARCHAR(255),

script LONGTEXT NOT NULL,

created_at TIMESTAMP NOT NULL DEFAULT '1970-01-02',

updated_at TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP

ON UPDATE CURRENT_TIMESTAMP,

FOREIGN KEY (username) REFERENCES cw_auth.users (username)

ON DELETE CASCADE ON UPDATE CASCADE

) CHARACTER SET utf8 COLLATE utf8_bin;This table can be JOINed with the cw_auth.users table to determine the user’s full name and e-mail address, etc.

The TEP Marketplace GUI will have some sort of workflow editor, and will store the workflows in this table. The script will likely be CQL, so the GUI will need to know how to parse and generate CQL.

9.3. App management

App management within the TEP Marketplace is described in the License manager section of this document.

9.4. List of owned jobs

The user should be presented with a list of their current jobs (in whatever state they’re in - in progress, completed, etc.). This information can be provided to the TEP Marketplace GUI by the ADES and/or the jobs table (see section ???). If possible, a progress bar (with an estimated time to completion) should be presented for in-progress jobs.

9.5. Job results

Upon completion of a process, the results (and logs, etc.) will automatically be stored (by the WPS itself) in the user’s workspace under a jobs/{jobId}/ directory.

9.6. Exposing workspace data as web services

A user should be able to select any data file (e.g., a GeoTIFF or GeoJSON file) or directory (e.g., jobs/{jobId}/Output) in their workspace and expose it as a CubeSERV web service (which provides several OGC web service endpoints). The user should be given the option to "expose as new web service" or "add to existing web service". Perhaps there can also be a shortcut way of doing this upon completion of a job.

The TEP will expose a single CubeWerx Suite web deployment and use only the default instance. To expose a set of one or more data files through CubeSERV, it must be first loaded as a CubeSTOR MariaDB feature set. Each TEP user will be assigned exactly one CubeSTOR data store, whose name is the user’s username. When a TEP user is created, the following steps must be taken to support this:

-

Create a CubeSTOR MariaDB data store whose name is the user’s username.

-

Add the following entry to the namedDataStores.xml file:

<DataStore name="{username}">

<Type>mariadb</Type>

<Source>{username}</Source>

</DataStore>-

Add the following entry to the accessControlRules.xml file:

<Rule appliesTo="cwAuth{{username}}">

<Allow>

<Operation class="*"/>

<Content dataStore="{username}" name="*"/>

</Allow>

</Rule>Conversely, these things must be removed when the TEP user is deleted.

The steps to expose a set of one or more data files as a new web service are:

-

Create a new CubeSTOR MariaDB feature set in that user’s data store. The feature-set name should be the job ID, data filename, or data directory name, and the user should be prompted for a title.

-

Set up some sort of good default style for map rendering. (In a future version, the user could even be prompted for options here.)

-

Register the specified source file(s) as data sources of this feature set.

To add one or more data files to an existing web service, it’s simply a matter of registering the specified source file(s) as new data sources.

The TEP GUI will need to present the user with the list of their currently-exposed web services. This list can be derived simply by querying the user’s CubeSTOR data store for the list of feature sets that are loaded into it. From this list, the user should be able to:

-

Make the web service either public or private by adding or removing the following feature-set specific entry to/from

accessControlRules.xml:

<Rule appliesTo="everybody">

<Allow>

<Operation class="GetMap"/>

<Operation class="GetFeature"/>

<Operation class="GetFeatureInfo"/>

<Content dataStore="{username}" name="{featureSetName}"/>

</Allow>

</Rule>-

Remove the web service (by removing the feature set and removing the feature-set specific entries in accessControlRules.xml.

-

In the future, possibly re-title the web service (by retitling the underlying feature set), etc.

With this arrangement, the following web-service URLs will exist:

- A WMS serving {username}'s exposed feature sets

-

https://{wherever}/cubeserv?DATASTORE={username}&SERVICE=WMS

- A WMS serving all exposed feature sets of all TEP users

- A WMTS serving {username}'s exposed feature sets

-

https://{wherever}/cubeserv?DATASTORE={username}&SERVICE=WMTS

- A WMTS serving all exposed feature sets of all TEP users

- A WFS serving {username}'s exposed feature sets

-

https://{wherever}/cubeserv?DATASTORE={username}&SERVICE=WFS

- A WCS serving {username}'s exposed feature sets

-

https://{wherever}/cubeserv?DATASTORE={username}&SERVICE=WCS

- An OGC API landing page for {username}'s exposed feature sets

- An OGC API endpoint for one of {username}'s exposed feature sets

-

https://{wherever}/cubeserv/default/ogcApi/{username}/collections/{collectionId}

All of these URLs, except for the last one, will always exist for all TEP users. The last one is feature-set specific, so there will be zero or more of them per user.

9.7. Sharing

In the first version of the TEP Marketplace, it has been tentatively agreed that the only way to share data with others will be by exposing it as a web service, marking it as "public", and sharing the URL(s) to this web service.

In a future version, we can allow a TEP user to share arbitrary content in their workspace with other users. To accommodate this, we can add the following table to the cw_tep database:

CREATE TABLE access_control (

username VARCHAR(255) NOT NULL,

path VARCHAR(4096) NOT NULL,

grant_user VARCHAR(255),

grant_type VARCHAR(255) NOT NULL,

FOREIGN KEY (username) REFERENCES cw_auth.users (username)

ON DELETE CASCADE ON UPDATE CASCADE,

FOREIGN KEY (grant_user) REFERENCES cw_auth.users (username)

ON DELETE CASCADE ON UPDATE CASCADE

) CHARACTER SET utf8 COLLATE utf8_bin;username is the username of the TEP user granting access to something in their workspace. path is the path of the file or directory being shared, and is relative to the root of the user’s workspace. grant_user is the username of the TEP user who’s being granted access, or NULL to grant all users access. grant_type is the type of access granted. In the first implementation of this mechanism, this will probably be limited to "r" for read-only access.

This table can be JOINed with the cw_auth.users table to determine the user’s full name and e-mail address, etc.

A user should be able to grant or revoke access to arbitrary files and directories in their workspace by selecting the appropriate option in the file-or-directory-specific menu in their workspace view.

Each user’s workspace view will have a directory called shared/ (that is not renameable or removable) whose subdirectories are the usernames of users who have granted the user access to at least one thing. Under these subdirectories will be the files and directories of the things being shared. In this way the user can access (and refer to in process inputs) other user’s shared files in exactly the same way as they refer to their own. This shared/ directory wouldn’t actually exist in S3; it would be a virtual directory that is grafted on by the GUI.

10. Catalogue service

10.1. Overview

Many of the components of the TEP marketplace are driven by the catalogue. The function of the catalogue is to:

-

keep track of the public and private resources offered by the TEP marketplace

-

keep track of associations between those resources

-

allow searching the catalogue to discover TEP resources

-

bind to the discovered resources

The catalogue for the TEP marketplace is based on the OGC API - Records standard.

10.2. Record

The atomic unit of information in the catalogue is the record. The following table lists the properties of a record:

| Queryables | Description |

|---|---|

recordId |

A unique record identifier assigned by the server. |

recordCreated |

The date this record was created in the server. |

recordUpdated |

The most recent date on which the record was changed. |

links |

A list of links for navigating the API, links for accessing the resource described by this records and links to other resources associated with the resource described by this record, etc. |

type |

The nature or genre of the resource. |

title |

A human-readable name given to the resource. |

description |

A free-text description of the resource. |

keywords |

A list of keywords or tag associated with the resource. |

keywordsCodespace |

A reference to a controlled vocabulary used for the keywords property. |

language |

This refers to the natural language used for textual values. |

externalId |

An identifier for the resource assigned by an external entity. |

created |

The date the resource was created. |

updated |

The more recent date on which the resource was changed. |

publisher |

The entity making the resource available. |

themes |

A knowledge organization system used to classify the resource. |

formats |

A list of available distributions for the resource. |

contactPoint |

An entity to contact about the resource. |

license |

A legal document under which the resource is made available. |

rights |

A statement that concerns all rights not addressed by the license such as a copyright statement. |

extent |

The spatio-temporal coverage of the resource. |

associations |

The OGC API Records specification defines a GeoJSON encoding for a record. The following is an example of the GeoJSON-encoded catalogue record describing a process that computes NDVI and operates on RCM2 and Sentinel2 data:

{

"id": "urn:uuid:66f5318a-b1b6-11ec-bed4-bb5d1e67b39f",

"type": "Feature",

"geometry": null,

"properties": {

"recordCreated": "2022-03-01",

"recordUpdated": "2022-03-25",

"type": "process",

"title": "Calculation of NDVI using the SNAP toolbox for Sentinel-2",

"description": "Calculation of NDVI using the SNAP toolbox for Sentinel-2",

"keywords": [ "NDVI", "SNAP", "process" ],

"language": "en",

"externalId": [

{"value": "http://www.cubewerx.com/cubewerx/cubeserv/tep/processes/NDVICalculation"}

],

"created": "2022-01-18",

"updated": "2222-01-18",

"publisher": "https://www.cubewerx.com",

"formats": [ "image/geo+tiff" ],

"contactPoint": "https://woudc.org/contact.php",

"license": "https://woudc.org/about/data-policy.php",

},

"links": [

{

"rel": "describedBy",

"type": "application/json",

"title": "OGC API Processes Description of the NDVICalculation Process",

"http://www.cubewerx.com/cubewerx/cubeserv/tep/processes/NDVICalculation?f=json"

},

{

"rel": "operatesOn",

"type": "application/json",

"title": "OGC API Coverages Description of the RCM2 Data Collection",

"http://www.cubewerx.com/cubewerx/cubeserv/tep/collections/rcm2?f=json"

},

{

"rel": "operatesOn",

"type": "application/json",

"title": "OGC API Coverages Description of the Sentinel2 Data Collection",

"http://www.cubewerx.com/cubewerx/cubeserv/tep/collections/sentinel2?f=json"

},

{

"rel": "alternate",

"type": "text/html",

"title": "This document as HTML",

"href": "https://www.cubewerx.com/cubewerx/cubeserv/tep/tepcat/item/urn:uuid:66f5318a-b1b6-11ec-bed4-bb5d1e67b39f"

}

]

}10.3. Resource types

The following list of resource types that are expected to be catalgued in the TEP:

-

collections

-

collections of features (vector or rasters)

-

sources (e.g. image sources for coverages)

-

-

collections of data products (e.g. RCM, Sentinel-1, etc.)

-

-

application or processes deployed on the TEP

-

feature access endpoint

-

coverage access endpoints

-

map access endpoints

-

tile access endpoints

-

style documents

10.4. Association types

Associations are expressed in the form <source resource> <association> <target resource>. The following list of associations and expected to exist among catalogues resources:

-

Renders

-

The source of the association RENDERS the target of the association.

-

e.g. Map access endpoint X RENDERS feature collection Y.

-

-

Tiles

-

The source to the association TILES the target of the association.

-

e.g. Tile access endpoint X TILES feature collection Y.

-

-

ParentOf

-

The source the association is the PARENTOF the target of the association.

-

e.g. Feature collection X is the PARENTOF feature Y.

-

-

Styles

-

The source of the association STYLES the target of the association.

-

e.g. Style document X STYLES feature collection Y.

-

-

OperatesOn

-

The source of the association OPERATESON the target of the association.

-

e.g. Application X OPERATESON data product Y.

-

-

Offers

-

The source of association OFFERS the target of the association.

-

e.g. Features access endpoint X OFFERS feature collection Y.

-

-

Describes

-

The source of the association DESCRIBES the target of the association.

-

OpenAPI document X DESCRIBES coverage accesspoint Y.

-

-

Asset

-

The source of the association is an ASSET of the target of the associations.

-

e.g. Thumbnail X is an ASSET of data product Y (i.e. downloadable or streambale data associated with the data product).

-

10.5. Search API

The Records API allows a subset of records to be retrieved from a catalogue using a logically connected set of predicates that may include spatial and/or temporal predicates.

The Records API extends OGC API - Features - Core: Part 1 to provide modern API patterns and encodings to facilitate to facilitate flexible discovery of resource available on the TEP.

The OGC API Features API that has been:

-

extended with additional parameters at the

/collections/{collectionId}/itemsendpoint, -

and constrained to a single information model (i.e. the record).

The following tables summarizes the access paths and relation types of the TEP catalogue search API:

| Path Template | Relation | Resource |

|---|---|---|

Common |

||

none |

Landing page of the catalogue |

|

|

API Description (optional) of the catalogue |

|

|

OGC conformance classes implemented for the catalogue server |

|

|

Metadata about the catalogues available in the TEP. |

|

Metadata describing a specific catalogue with unique identifier |

||

Records |

||

|

The search endpoint for the catalogue with identifier |

|

|

The URL of a specific record in the catalogue with record identifier |

|

Where:

-

{collectionId} = an identifier for the catalogue

-

{recordId} = an identifier for a specific record within a catalogue

|

Note

|

At the moment it is anticipated that the TEP will only have a single catalogue storing all the metadata of the system. However, the API is flexible enough to accommodate multiple catalogues if that becomes a requirement. |

The following tables lists the query parameter that may be used at the search endpoint to query the TEP catalogue:

| Parameter name | Description |

|---|---|

bbox |

A bounding box. If the spatial extent of the record intersects the specified bounding box then the record shall be presented in the response document. |

datetime |

A time instance or time period. If the temporal extent of the record intersects the specified data/time value then the record shall be presented in the response document. |

limit |

The number of records to be presented in a response document. |

q |